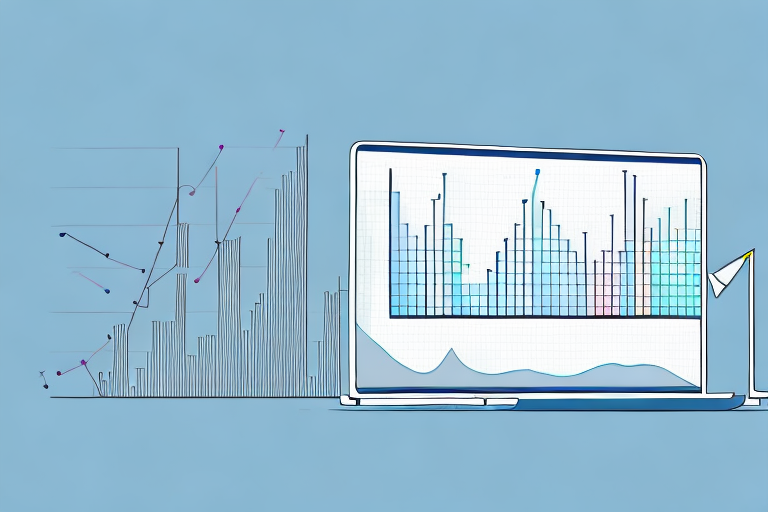

Histograms are a widely used data visualization tool that helps researchers in many fields gain insight into their data. A histogram shows how data points are distributed and how spread out they are, which also gives a rough sense of the data's standard deviation. Because a distribution can be grasped at a glance, histograms are useful in almost any field that works with numerical data. But how do you interpret a histogram, and more specifically, how can you tell which of two histograms depicts a higher standard deviation? This guide gives an overview of how to interpret histograms and how to estimate the standard deviation from one.

What is a Histogram?

A histogram is a graphical representation of the distribution of numerical data. It illustrates the shape and spread of the data points collected in a sample. Histograms look similar to bar graphs, but where a bar graph compares separate categories, a histogram divides a continuous numerical range into consecutive intervals called bins and shows how many data points fall into each one. To construct a histogram, the range of the data is split into bins and the number of values in each bin is counted; the bars are usually drawn touching to show that the bins are adjacent. The X-axis of a histogram represents the range of numerical values, while the Y-axis represents the frequency (count) in each bin.
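
To make the binning step concrete, here is a minimal Python sketch that counts how many values fall into each bin. The function name `histogram_counts`, the half-open bins `[low, low + bin_width)`, and the exam-score data are hypothetical choices for illustration; plotting libraries apply their own binning rules.

```python
import math

def histogram_counts(values, start, bin_width, num_bins):
    """Count how many values fall in each half-open bin [edge, edge + bin_width)."""
    counts = [0] * num_bins
    for v in values:
        index = math.floor((v - start) / bin_width)
        # Values outside the covered range are simply skipped in this sketch.
        if 0 <= index < num_bins:
            counts[index] += 1
    return counts

# Hypothetical exam scores, binned into five 10-point bins from 50 to 100.
exam_scores = [52, 58, 61, 64, 67, 68, 71, 73, 74, 75, 78, 82, 85, 91]
counts = histogram_counts(exam_scores, start=50, bin_width=10, num_bins=5)
for i, count in enumerate(counts):
    low = 50 + i * 10
    print(f"{low}-{low + 10}: {count}")
```

The counts come out as 2, 4, 5, 2 and 1, which would be the heights of the five bars. Because the last bin is half-open, a score of exactly 100 would be dropped by this sketch.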

Histograms are useful for seeing where data points concentrate, and they can reveal trends or patterns in the data. They can also be used to compare different data sets, or the same data set across different time periods, which makes them a quick way to understand a distribution.

How to Interpret Histograms

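Interpreting a histogram starts with the bars themselves: their heights and the overall shape they form describe where the data concentrate and how variable they are. If the bars form a roughly symmetric, bell-shaped mound around a single peak, the data may be approximately normally distributed; a symmetric shape is consistent with a normal distribution but does not prove it. If the bars are roughly the same height across the whole range, the data are closer to uniformly distributed. If the histogram is skewed, most of the data points bunch up on one side while a longer tail of less frequent values stretches out on the other: a long tail to the right means the data are right-skewed (positively skewed), and a long tail to the left means they are left-skewed (negatively skewed).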
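
One way to put a number on skew is the Fisher-Pearson (moment) coefficient of skewness: the average cubed distance from the mean divided by the cube of the standard deviation. Values near zero suggest a symmetric histogram, positive values a longer right tail, and negative values a longer left tail. The sketch below uses the population form of the formula; the function name `moment_skewness` and the sample data are hypothetical.

```python
def moment_skewness(values):
    """Fisher-Pearson (moment) coefficient of skewness: m3 / m2**1.5."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # average squared deviation
    m3 = sum((x - mean) ** 3 for x in values) / n  # average cubed deviation
    return m3 / m2 ** 1.5

symmetric = [1, 2, 2, 3, 3, 3, 4, 4, 5]
right_skewed = [1, 1, 1, 2, 2, 3, 4, 7, 10]   # long tail toward high values
left_skewed = [11 - x for x in right_skewed]  # mirror image: long tail toward low values

print(moment_skewness(symmetric))     # 0.0
print(moment_skewness(right_skewed))  # a positive number
print(moment_skewness(left_skewed))   # a negative number
```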

In addition to the shape, the number and width of the bars also matter. The number of bars is the number of bins the data were divided into, which is a choice made by whoever built the histogram rather than a property of the data, and the width of each bar shows the range of values that bin covers. These two choices are linked (for a fixed range, wider bins mean fewer bars), and changing them can make the same data look smoother or more jagged, so check the bin width before reading too much into small bumps.
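
The effect of bin width is easy to see with a rough text rendering. In this sketch the function `text_histogram` and the data are hypothetical; it draws the same values with 10-unit bins and with 25-unit bins. With 10-unit bins the peak in the 70s is visible; with 25-unit bins there are only two bars and that peak disappears, even though the data are identical.

```python
import math

def text_histogram(values, start, bin_width, num_bins):
    """Return one text row per bin: the bin's range followed by one '#' per value."""
    counts = [0] * num_bins
    for v in values:
        index = math.floor((v - start) / bin_width)
        if 0 <= index < num_bins:  # skip values outside the covered range
            counts[index] += 1
    rows = []
    for i, count in enumerate(counts):
        low = start + i * bin_width
        rows.append(f"[{low}, {low + bin_width}) {'#' * count}")
    return rows

data = [52, 58, 61, 64, 67, 68, 71, 73, 74, 75, 78, 82, 85, 91]

# Same data and same 50-100 range, two different bin widths.
for row in text_histogram(data, start=50, bin_width=10, num_bins=5):
    print(row)
print()
for row in text_histogram(data, start=50, bin_width=25, num_bins=2):
    print(row)
```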

What is Standard Deviation?

Standard deviation is a measure of the variability, or spread, of a set of numerical data. Roughly speaking, it describes how far the data points typically lie from the mean of all the points. More precisely, it is the square root of the variance, and the variance is the average of the squared distances from the mean. A small standard deviation means the data points cluster tightly around the mean; a large one means they are spread out over a wider range of values.
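
The definition translates directly into code. This minimal Python sketch (the function name `population_std` is hypothetical) computes the variance as the average squared distance from the mean, takes its square root, and checks the result against the standard library's `statistics.pstdev`. Note that `statistics.stdev` divides by n - 1 instead of n, the usual choice when the data are a sample used to estimate the spread of a larger population.

```python
import math
import statistics

def population_std(values):
    n = len(values)
    mean = sum(values) / n
    # Variance: the average squared distance from the mean.
    variance = sum((x - mean) ** 2 for x in values) / n
    # Standard deviation: square root of the variance, back in the data's units.
    return math.sqrt(variance)

data = [2, 4, 4, 4, 5, 5, 7, 9]  # mean is 5
print(population_std(data))      # 2.0
print(statistics.pstdev(data))   # 2.0
print(statistics.stdev(data))    # sample SD (divides by n - 1), slightly larger
```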

How to Determine the Standard Deviation of a Histogram

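A histogram does not display the standard deviation directly, but you can estimate it from the bars. Treat every data point in a bin as if it sat at the bin's midpoint, use the bar heights as weights to compute the mean, and then take the weighted average of the squared distances from that mean; the square root of that value approximates the standard deviation. To compare two histograms by eye, look at how the frequency is spread along the x-axis: the histogram with more of its data in bars far from its own center, and less concentrated in the middle, has the higher standard deviation. Moving a histogram to higher x-axis values changes its mean but not, by itself, its standard deviation; what matters is how widely the values are spread, not where they are centered.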
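
Here is a minimal sketch of the midpoint method in Python. The function `grouped_std` and the four lists of bar heights are hypothetical, and the result is only an approximation because the exact positions of the values inside each bin are unknown. The example compares a histogram concentrated in its middle bin, one spread evenly across all bins, the concentrated one moved one bin to the right, and the concentrated one with every bar twice as tall.

```python
import math

def grouped_std(bin_edges, counts):
    """Estimate the mean and population SD of data summarized by a histogram.

    Assumes every value in a bin sits at the bin's midpoint, so the result
    approximates (rather than reproduces) the SD of the raw data.
    """
    midpoints = [(lo + hi) / 2 for lo, hi in zip(bin_edges, bin_edges[1:])]
    n = sum(counts)
    mean = sum(m * c for m, c in zip(midpoints, counts)) / n
    variance = sum(c * (m - mean) ** 2 for m, c in zip(midpoints, counts)) / n
    return mean, math.sqrt(variance)

edges = [0, 10, 20, 30, 40, 50]
histograms = {
    "concentrated": [0, 5, 20, 5, 0],  # most data in the middle bin
    "spread out": [6, 6, 6, 6, 6],     # same total count, spread evenly
    "shifted": [0, 0, 5, 20, 5],       # concentrated, moved one bin right
    "doubled": [0, 10, 40, 10, 0],     # concentrated, every bar twice as tall
}
for name, counts in histograms.items():
    mean, sd = grouped_std(edges, counts)
    print(f"{name}: mean={mean:.1f}, sd={sd:.2f}")
# concentrated: mean=25.0, sd=5.77
# spread out: mean=25.0, sd=14.14
# shifted: mean=35.0, sd=5.77
# doubled: mean=25.0, sd=5.77
```

The evenly spread histogram has the highest standard deviation even though its tallest bar is the shortest of the four; shifting the concentrated histogram changes only its mean, and doubling every bar changes neither.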

Common Misconceptions About Interpreting Histograms

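Many people believe that a histogram with taller bars, meaning higher frequency levels, indicates a higher standard deviation. This is not true: bar heights on their own only reflect how many data points fall in each bin. If every bar doubles in height while the bars stay in the same positions, the standard deviation does not change. To judge standard deviation, look at how widely the data are spread along the x-axis around the center, and how much of the frequency sits in bars far from it.

Benefits of Interpreting Histograms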

Interpreting histograms gives us greater insight into our data and supports more informed decisions. By seeing how data points are distributed across bins, we can tell where values concentrate, where they are rare, and whether there are unusual values worth investigating. Comparing histograms of the same measurement before and after a change can also show how that change shifted the distribution, which can help us manage resources and plan more effectively.

Tips for Easily Understanding Histograms

The following tips can help you easily understand histograms:

- Start by looking at the X and Y axes to determine what each axis represents.

- Look for patterns in the heights and shapes of the bars. Do any trends stand out?

- Look for outliers: isolated bars that sit apart from the majority of the data points.

- Note whether any bars are particularly higher or lower than the others.

- Check for any skewness in your data.
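
For the outlier tip, one common rule of thumb (not the only one) is Tukey's fences: flag values more than 1.5 interquartile ranges below the first quartile or above the third. The sketch assumes Python 3.8 or later for `statistics.quantiles`, whose default "exclusive" method is one of several quartile conventions, so other tools may draw slightly different fences. The function name `tukey_outliers` and the response-time data are hypothetical.

```python
import statistics

def tukey_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical response times in milliseconds; one value sits far from the rest.
response_times = [12, 14, 15, 15, 16, 17, 18, 18, 19, 21, 45]
print(tukey_outliers(response_times))  # [45]
```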

Examples of Different Types of Histograms

Histograms can vary greatly depending on the data they are displaying. Here are some examples of different types of histograms:

- A clustered histogram that shows, side by side, how the data points of several different groups are distributed across the same bins.

- A cumulative histogram that displays how many data points are less than or equal to each numerical value.

- An overlapping histogram that allows comparison between two sets of data points.
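
A cumulative histogram can be built from an ordinary one by keeping a running total of the bar heights, as in this minimal sketch using `itertools.accumulate`. The bin edges and counts are hypothetical; with half-open bins `[low, high)`, each cumulative bar counts the values below that bin's upper edge.

```python
from itertools import accumulate

bin_edges = [50, 60, 70, 80, 90, 100]
counts = [2, 4, 5, 2, 1]  # ordinary histogram: number of values in each bin

# Each cumulative bar adds up every bin up to and including its own.
cumulative = list(accumulate(counts))
for upper, total in zip(bin_edges[1:], cumulative):
    print(f"values below {upper}: {total}")
```

The running totals are 2, 6, 11, 13 and 14, so the last cumulative bar always equals the total number of data points.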

By understanding how to read each type of histogram, one can gain deeper insight into the data.

In conclusion, this guide provided an overview of how to interpret histograms, estimate standard deviation from one, and tell which histogram depicts a higher standard deviation. By interpreting histograms correctly, one can gain valuable insight into data that supports better decisions and more accurate predictions.