A normal distribution is a widely used probability distribution that describes how the values of a continuous random variable are spread around a central value. It is also known as the Gaussian distribution, after the mathematician Carl Friedrich Gauss (1777-1855). A normal distribution is fully characterized by its mean and standard deviation, and the standard deviation makes it possible to compare the variability of different distributions. In this article, we discuss which normal distributions have the greatest standard deviation.

What Is a Normal Distribution?

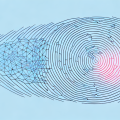

A normal distribution is a type of continuous probability distribution. It is defined by its probability density function, which describes how probability is spread over the range of a continuous random variable. The density gives the relative likelihood of values near a given point; the probability that an outcome falls within an interval is the area under the curve over that interval. "Normal distribution" and "Gaussian distribution" are two names for the same distribution. It is typically drawn as a bell curve with a single peak in the center.
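As a minimal sketch of the density described above, the function below evaluates the standard formula for the normal probability density. The name `normal_pdf` and the example values are illustrative assumptions, not something taken from the article.

```python
import math


def normal_pdf(x: float, mean: float = 0.0, std_dev: float = 1.0) -> float:
    """Density of a normal distribution with the given mean and standard deviation."""
    if std_dev <= 0:
        raise ValueError("std_dev must be positive")
    # Distance from the mean, measured in standard deviations.
    z = (x - mean) / std_dev
    return math.exp(-0.5 * z * z) / (std_dev * math.sqrt(2.0 * math.pi))


# The density is highest at the mean and identical at equal distances on either side.
peak = normal_pdf(0.0)
left = normal_pdf(-1.0)
right = normal_pdf(1.0)
print(peak, left, right)
```

Python's standard library offers the same calculation through `statistics.NormalDist(mu, sigma).pdf(x)`, which may be preferable in practice.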

The normal distribution has several properties that make it useful in many fields. For example, it is symmetric, meaning that the left and right halves of the curve are mirror images of each other around the mean. The graph is also unimodal, meaning that it has a single peak (the mode), which sits at the mean, and no other peaks or valleys.

Examining the Properties of the Normal Distribution

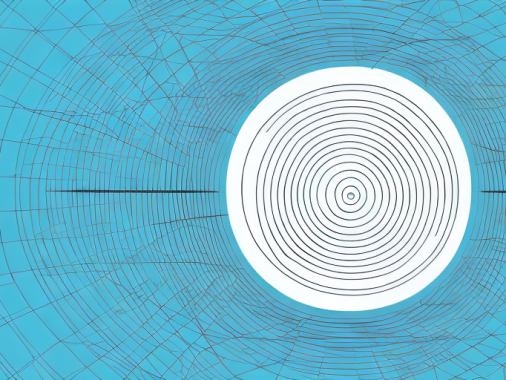

A normal distribution has two defining parameters: the mean and the standard deviation. The mean, or average value, is calculated by adding up all of the values in a given set of data, then dividing that sum by the number of values. The standard deviation measures how far values typically lie from that mean. In other words, it is a measure of how much variability there is in a data set.

A key property of the normal distribution is that its shape is completely determined by its mean and standard deviation. The mean sets where the peak sits, and the standard deviation sets how wide and flat the curve is, so these two parameters can be adjusted to fit many kinds of data.
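To illustrate how the standard deviation controls the shape, the sketch below compares two normal distributions that share a mean but differ in spread. The values 1.0 and 3.0 are arbitrary choices for illustration.

```python
from statistics import NormalDist

# Same mean, different standard deviations (illustrative values).
narrow = NormalDist(mu=0.0, sigma=1.0)
wide = NormalDist(mu=0.0, sigma=3.0)

# Height of each curve at its peak (the mean).
narrow_peak = narrow.pdf(0.0)
wide_peak = wide.pdf(0.0)

# Probability of landing within one unit of the mean.
narrow_mass = narrow.cdf(1.0) - narrow.cdf(-1.0)
wide_mass = wide.cdf(1.0) - wide.cdf(-1.0)

print(f"peak height: narrow={narrow_peak:.4f}, wide={wide_peak:.4f}")
print(f"mass within 1 unit of the mean: narrow={narrow_mass:.4f}, wide={wide_mass:.4f}")
```

The distribution with the larger standard deviation has a lower peak and places less of its probability close to the mean, which is what a wider, flatter bell curve looks like.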

Identifying the Standard Deviation of a Normal Distribution

Calculating the standard deviation requires the mean and the variance. To find the variance, subtract the mean from each value in the data set and square each difference. The sum of these squared differences is then divided by the number of values (or by one less than that number when the data are a sample rather than the whole population) to obtain the variance. The standard deviation is the square root of this variance.
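The calculation can be sketched step by step: subtract the mean from each value, square the differences, average them, and take the square root. The helper name `population_variance` and the sample data are assumptions made for illustration. This version divides by the number of values, which is the population form.

```python
import math
from statistics import pstdev


def population_variance(values):
    """Average squared distance of each value from the mean."""
    n = len(values)
    if n == 0:
        raise ValueError("values must not be empty")
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / n


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
variance = population_variance(data)
std_dev = math.sqrt(variance)

print(variance, std_dev)
# Cross-check against the standard library's population standard deviation.
print(pstdev(data))
```

For a sample drawn from a larger population, `statistics.stdev` divides by one less than the number of values and is usually the better choice.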

Once the standard deviation of each distribution is known, the distributions can be compared directly. For two normally distributed sets of data, the set with the larger standard deviation has the greater variability. The difference or ratio between the two standard deviations shows how much more spread out it is.
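A comparison of this kind might look like the following sketch, which draws two simulated samples and compares their sample standard deviations. The means, standard deviations, sample sizes, and seeds are all arbitrary assumptions chosen for illustration.

```python
from statistics import NormalDist, stdev

# Two simulated, normally distributed data sets with the same mean (illustrative parameters).
sample_a = NormalDist(mu=50.0, sigma=2.0).samples(1000, seed=1)
sample_b = NormalDist(mu=50.0, sigma=5.0).samples(1000, seed=2)

sd_a = stdev(sample_a)
sd_b = stdev(sample_b)

# The set with the larger standard deviation is the more variable one.
more_variable = "B" if sd_b > sd_a else "A"
ratio = sd_b / sd_a

print(f"sd_a={sd_a:.2f}, sd_b={sd_b:.2f}, ratio={ratio:.2f}, more variable: {more_variable}")
```

Because the samples are random, the estimated ratio will be close to, but not exactly, the ratio of the true standard deviations.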

Factors That Affect the Standard Deviation of a Normal Distribution

The standard deviation of a normal distribution is the square root of its variance and does not depend on the mean: shifting every value by the same amount moves the curve without changing its width. In a data set, however, several factors influence the calculated standard deviation. Outliers push it higher, while values that are grouped closely together result in a lower standard deviation. Adding new values to the data set can change both the mean and the standard deviation.
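The sketch below illustrates two of these effects on a small, made-up data set: adding the same constant to every value changes the mean but not the standard deviation, while adding a single outlier raises the standard deviation sharply.

```python
from statistics import mean, pstdev

base = [48.0, 49.0, 50.0, 51.0, 52.0]

# Shift every value by the same amount: the mean moves, the spread does not.
shifted = [v + 100.0 for v in base]

# Add one extreme value: both the mean and the spread change.
with_outlier = base + [90.0]

sd_base = pstdev(base)
sd_shifted = pstdev(shifted)
sd_outlier = pstdev(with_outlier)

print(f"base:         mean={mean(base):.2f}, sd={sd_base:.2f}")
print(f"shifted:      mean={mean(shifted):.2f}, sd={sd_shifted:.2f}")
print(f"with outlier: mean={mean(with_outlier):.2f}, sd={sd_outlier:.2f}")
```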

Applications of the Normal Distribution with High Standard Deviations

High standard deviations are often seen in situations where there are extreme outliers in the data set. For instance, when studying the heights of people, there are usually a few individuals who are much taller than most of the others. Including them raises the standard deviation calculated for that population, and a large number of such values may indicate that the data are not well described by a normal distribution.

Highly variable populations are also often described by their high standard deviations. For example, stock returns are often modeled as approximately normal with a high standard deviation, since prices can fluctuate greatly over time.

Strategies for Maximizing Standard Deviations in a Normal Distribution

When attempting to maximize the standard deviation of normally distributed data, it is important to consider where the variability comes from. For example, if outliers are present and can be confidently identified as genuine observations rather than errors, keeping them in the data set preserves their contribution to the spread. On the other hand, if much of the variability comes from large gaps between values, for example because part of the range was never sampled, those gaps should be filled with additional observations so that the estimate is reliable.

Another strategy for maximizing standard deviations in a normal distribution is to look for sources of information that produce high variability in the data set. For example, if values tend to be close together, new sources of information may be gathered which can increase their range and so increase the standard deviation.

Conclusion

In conclusion, we have examined which normal distributions have the greatest standard deviation and discussed how this characteristic can be calculated and compared between distributions. We have also discussed some strategies for increasing variability in data sets with low standard deviations and examined some situations in which high variability arises.

There is no upper limit on the standard deviation of a normal distribution, so no single normal distribution has the greatest one. Among any set of distributions being compared, the one with the largest standard deviation is the widest and flattest. Understanding how this characteristic is calculated and what influences it can help improve analysis and decision-making related to data sets.